Building My Own Transformer Model

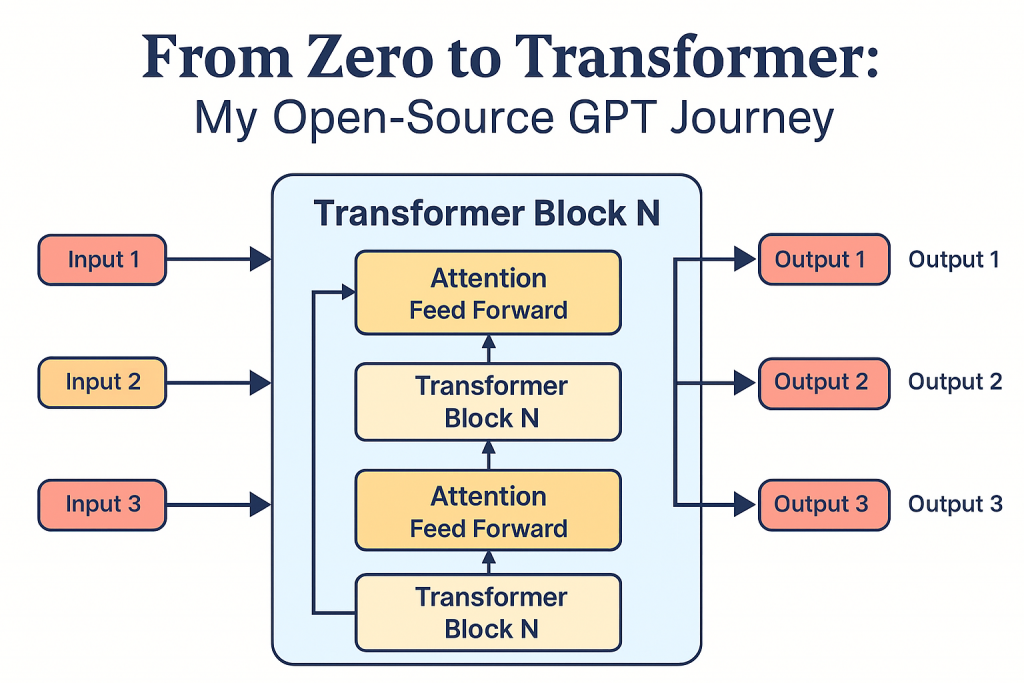

I’ve decided to take on a challenge that’s equal parts exciting and intimidating: building my own open-source transformer model from scratch, something in the spirit of GPT-OSS.

Right now, I have basic machine learning skills and a working knowledge of Python. Over the coming weeks and months, I’ll be diving deep into the theory, architecture, and engineering practices needed to go from “just enough ML to be dangerous” to training, packaging, and releasing a working transformer model that anyone can run, study, and improve.

📅 The Roadmap Ahead

I’ve mapped out a structured, 16-week plan that takes me from fundamentals to a fully functioning model:

- Phase 1: Strengthen Python for ML, master the basics of deep learning, and get comfortable with PyTorch.

- Phase 2: Understand transformer internals, implement a MiniGPT from scratch, and train it on a small dataset.

- Phase 3: Learn Hugging Face tooling, study GPT-OSS internals, and package my own model for open-source release.

🛠 Building in Public

I’ll be sharing regular technical updates here, including:

- Code snippets and architectural diagrams

- Lessons learned (and mistakes made)

- Benchmarks, performance tuning, and deployment notes

- Thoughts on ethical and responsible AI design

If you’re curious about transformers, want to follow along with a real-world, from-zero build, or are working on something similar, I’d love for you to join me on this journey.

Series Outline

I’ve loosely mapped the three phases onto weeks, which gives the following detailed learning and implementation plan:

Phase 1: Foundations

Goal: Build the coding, data handling, and ML fundamentals to support deep learning work.

- Week 1: Python for ML, The Essentials

- Data structures, functions, decorators, file I/O

- CLI tool: YAML → JSON converter

- Why clean, modular code matters for ML projects

- Week 2: Data Wrangling & Visualization

- NumPy, pandas, matplotlib

- Cleaning and visualizing a dataset

- Building intuition for data distributions

- Week 3: ML Fundamentals

- Gradient descent, loss functions, overfitting

- Logistic regression from scratch

- Visualizing decision boundaries

- Week 4: PyTorch Basics

- Tensors, autograd, nn.Module

- MNIST classifier with custom layers

- Saving/loading models
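As a preview of the Week 3 exercise, here is a minimal sketch of logistic regression trained with batch gradient descent, written in plain Python with no dependencies. Everything in it is a placeholder assumption for illustration (the function names, the toy two-cluster dataset, the learning rate, and the epoch count), not the code the series will end up using.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(xs, ys, lr=0.1, epochs=500):
    """Fit weights and bias by batch gradient descent on mean cross-entropy."""
    n_features = len(xs[0])
    n = len(xs)
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of cross-entropy w.r.t. the pre-sigmoid score
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        # Step against the averaged gradient.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    # Class 1 if the predicted probability is at least 0.5.
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


# Hypothetical toy data: two Gaussian clusters, one per class.
random.seed(0)
xs = [[random.gauss(-2, 1), random.gauss(-2, 1)] for _ in range(50)]
xs += [[random.gauss(2, 1), random.gauss(2, 1)] for _ in range(50)]
ys = [0] * 50 + [1] * 50

w, b = train_logistic_regression(xs, ys)
accuracy = sum(predict(w, b, x) == y for x, y in zip(xs, ys)) / len(xs)
print(f"weights={w}, bias={b:.3f}, train accuracy={accuracy:.2f}")
```

The decision boundary is the line where `w[0] * x0 + w[1] * x1 + b = 0`, which is what the "visualizing decision boundaries" item would plot.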

Phase 2: Transformer Theory & MiniGPT

Goal: Understand transformer internals and implement a small GPT model.

- Week 5: Attention Is All You Need, Literally

- Self-attention, multi-head attention

- Implementing scaled dot-product attention

- Visualizing attention weights

- Week 6: Inside the Decoder Stack

- Positional encoding, LayerNorm, residuals

- Why GPT is decoder-only

- Building a transformer block

- Week 7: Tokenization & Data Prep

- Byte Pair Encoding (BPE)

- Training a tokenizer on Shakespeare

- Creating a PyTorch Dataset and DataLoader

- Week 8: Building MiniGPT

- Stacking transformer blocks

- Causal masking for autoregressive models

- Forward pass → logits

- Week 9: Training MiniGPT

- Training loop, optimizer, scheduler

- Gradient clipping, checkpointing

- Sampling strategies (greedy, top-k, nucleus)

- Week 10: Evaluating & Tuning

- Perplexity, BLEU, ROUGE

- Comparing sampling outputs

- Lessons learned from first training run
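To make the Week 5 and Week 8 items a little more concrete, here is a rough sketch of scaled dot-product attention with a causal mask, again in dependency-free Python rather than PyTorch. The function names and the tiny three-token input are my own assumptions for illustration; a real MiniGPT would use batched tensors and learned query/key/value projections.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q, k, v):
    """Single-head attention over lists of T vectors.

    Position i only attends to positions 0..i. Leaving future positions
    out of the softmax is equivalent to masking their scores with -inf.
    Returns (outputs, weights), where weights is a T x T matrix.
    """
    d_k = len(k[0])
    seq_len = len(k)
    outputs, weights = [], []
    for i, q_i in enumerate(q):
        # Scaled dot products between query i and keys 0..i.
        scores = [
            sum(a * b for a, b in zip(q_i, k[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        # Pad with zeros so every row has length T (future weights are 0).
        probs = softmax(scores) + [0.0] * (seq_len - i - 1)
        weights.append(probs)
        # Weighted sum of the value vectors.
        outputs.append(
            [sum(p * v[j][c] for j, p in enumerate(probs)) for c in range(len(v[0]))]
        )
    return outputs, weights


# Hypothetical toy input: 3 tokens, 2-dimensional vectors, q = k = v.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(x, x, x)
for row in weights:
    print([round(p, 3) for p in row])
```

Printing `weights` gives a lower-triangular matrix: the first token can only attend to itself, and no token ever places weight on a later position, which is the property that makes autoregressive generation possible.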

Phase 3: Scaling & OSS Integration

Goal: Learn large-scale model tooling, integrate with Hugging Face, and release my own OSS model.

- Week 11: Hugging Face Transformers 101

- Loading pretrained models/tokenizers

- Fine-tuning GPT-2 on your dataset

- Creating a custom pipeline

- Week 12: Pushing to the Hub

- Model cards, README, licensing

- Uploading to Hugging Face Hub

- Sharing inference demos

- Week 13: Inside GPT-OSS

- Architecture and scaling laws

- Quantization formats (MXFP4, bfloat16)

- Running GPT-OSS locally

- Week 14: Serving Models at Scale

- vLLM, transformers serve

- Benchmarking latency and throughput

- Dockerizing inference

- Week 15: Packaging Your Model

- OSS license selection

- Documentation best practices

- Example notebooks

- Week 16: Public Release

- Deploying to cloud (Azure/GCP/AWS)

- Announcing on GitHub, Hugging Face, LinkedIn

- Inviting community contributions

📢 Follow Along

I’ll post updates as I complete each phase, so you can see the process unfold step-by-step.

Whether you’re a beginner curious about ML, a researcher interested in OSS models, or an engineer building AI systems at scale, there’ll be something here for you.

Let’s see where this road takes us.
