In Part 1 of this series, I built a simple neural network for binary and multiclass classification to get comfortable with the fundamentals of deep learning. For Part 2, I shifted focus to something equally important in the world of transformers: tokenization.

Transformers do not work directly with raw text. They need text to be broken down into smaller units called tokens, which are then mapped to numerical IDs. This process is handled by a tokenizer. Modern tokenizers like Byte Pair Encoding (BPE) or WordPiece are highly optimized, but I wanted to understand what happens under the hood. So I built a MiniTokenizer from scratch in Python.

This project is not meant to replace production-grade tokenizers like tiktoken or Hugging Face’s tokenizers. Instead, it is a learning tool that demonstrates the fundamentals of how text becomes numbers in an NLP pipeline.

Why Build a Tokenizer

Tokenization is the bridge between human-readable text and machine-readable numbers. Without it, models cannot process language. By building my own tokenizer, I learned:

- How to split text into meaningful units

- How to construct a vocabulary mapping tokens to integer IDs

- How encoding and decoding work in practice

- Why handling unknown tokens is essential

- How regex can be used for simple text processing

This exercise gave me a deeper appreciation for the complexity of modern tokenizers and prepared me for using BPE-based tokenizers in the actual GPT model.

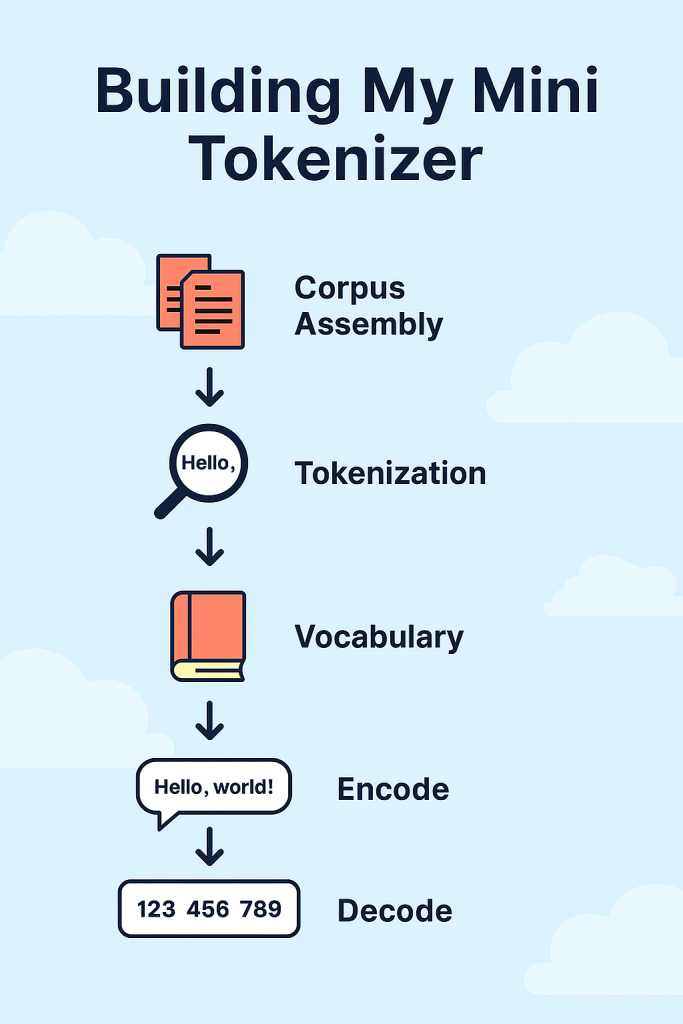

How the MiniTokenizer Works

The MiniTokenizer is implemented in a Jupyter notebook and uses only Python’s standard library. Here are the main steps:

1. Corpus Assembly

All .txt files in a directory are concatenated into a single corpus. An (end-of-sequence) token is added between documents.

files = Path('./texts').glob('*.txt')

with open('all_text.txt', 'w', encoding='utf-8') as outfile:

for file in files:

outfile.write(Path(file).read_text(encoding='utf-8') + '<EOS>')2. Tokenization

The text is split using a regular expression that separates punctuation, whitespace, and special characters into their own tokens.

tokenized_text = re.split(r'([,.!?():;_\'"]|--|\s)', raw_text)3. Vocabulary Construction

A vocabulary is built by mapping each unique token to an integer index. An <UNK> token is added to handle unknown tokens.

all_tokens = sorted(set(tokenized_text))

vocab = {token: index for index, token in enumerate(all_tokens)}

vocab.update({'<UNK>': len(vocab)})4. Encoding and Decoding

The MiniTokenizer class provides methods to encode text into token IDs and decode token IDs back into text.

class MiniTokenizer:

def __init__(self, vocab):

self.vocab = vocab

self.inverse_vocab = {index: token for token, index in vocab.items()}

def encode(self, text):

tokens = re.split(r'([,.!?():;_\'"]|--|\s)', text)

return [self.vocab.get(token, self.vocab['<UNK>']) for token in tokens]

def decode(self, token_ids):

return ''.join([self.inverse_vocab[token_id] for token_id in token_ids])Example Usage

# Initialize the tokenizer

tokenizer = MiniTokenizer(vocab)

# Encode text

text = "Hello, world! This is a test of how well the tokenizer works."

token_ids = tokenizer.encode(text)

print(token_ids)

# Decode back to text

decoded_text = tokenizer.decode(token_ids)

print(decoded_text)Output:

[7357, 10, 0, 3, 7182, 4, 0, 3, 1335, 3, 4267, 3, 1473, 3, 6587, 3, 4955, 3, 3929, 3, 7092, 3, 6602, 3, 7357, 3, 7181, 12, 0]

<UNK>, world! This is a test of how well the <UNK> works.What I Learned

- Tokenization is not trivial. Even a simple regex-based tokenizer requires careful handling of punctuation, whitespace, and unknown tokens.

- Vocabulary construction is critical. The way you build and prune your vocabulary directly affects model performance.

- Encoding and decoding must be consistent. If the mapping is not reversible, the model cannot reliably generate text.

- Modern tokenizers like BPE solve many of the limitations of word-level tokenization by breaking words into subwords, which improves handling of rare and unseen words.

Next Steps

In the actual GPT model, I will use a BPE-based tokenizer such as tiktoken. BPE tokenizers are more efficient and better suited for large-scale language models. However, building this MiniTokenizer gave me the intuition I need to understand how those more advanced tokenizers work.

Try It Yourself

The code is available on GitHub. You can clone the repository, add your own text files, and experiment with encoding and decoding.

👉 MiniTokenizer on GitHub

Build It Yourself

If you want to try building it yourself, you can find the complete code with detailed explanations of each block in the source code section at the end of this post. All the best!

Closing Thoughts

This project was a valuable step in my GPT-from-scratch journey. By building a tokenizer, I now understand how raw text is transformed into the numerical sequences that power deep learning models. In the next part of the series, I will begin exploring the transformer architecture itself, starting with attention mechanisms.

Stay tuned for Part 3.

Source Code

from pathlib import Path

import re

📚 Corpus Assembly: Merging Text Files for Tokenization¶

This step combines multiple source documents into a single text file, preparing a unified corpus for downstream tokenization and analysis.

Inputs¶

- Directory:

texts/containing.txtfiles (UTF-8 encoded). - Pattern: All files matching

*.txtare included; subfolders are ignored.

Process Overview¶

- Enumerate Files: Use

Path('./texts').glob('*.txt')to list all text files in the directory. - Read & Concatenate: For each file, read its contents as UTF-8 and append to a single output file.

- Separation: Add a end of sequence token (

<EOS>) after each file to ensure clear separation between documents. - Output: Write the combined result to

all_text.txtin the project root.

Why This Step?¶

- Consistency: Ensures all data is in one place for easier processing and reproducibility.

- Efficiency: Downstream scripts (tokenizers, analyzers) can operate on a single file, simplifying I/O.

- Flexibility: Easy to add or remove source files by updating the

texts/folder.

Validation & Tips¶

- Check the size of

all_text.txtto confirm all data was written. - Open the file and inspect the start/end of each document for encoding or separator issues.

- For reproducible order, use

sorted(Path('./texts').glob('*.txt')). - To add metadata, consider writing the filename or a header before each document.

Next Steps¶

- Use

all_text.txtas input for tokenizer training, vocabulary building, or text analysis. - Optionally, preprocess the text (e.g., normalization, lowercasing) before tokenization if required by your workflow.

files = Path('./texts').glob('*.txt')

with open('all_text.txt', 'w', encoding='utf-8') as outfile:

for file in files:

outfile.write(Path(file).read_text(encoding='utf-8') + '<EOS>')

📏 Corpus Character Count: Quick Data Integrity Check¶

After merging your text files into all_text.txt, it’s important to verify that the corpus was assembled correctly and contains the expected amount of data. This cell performs a simple but effective validation by reading the entire file and reporting the total number of characters.

Purpose¶

- Sanity Check: Confirms that

all_text.txtis not empty and that the concatenation process worked as intended. - Data Integrity: Helps detect issues such as missing files, encoding errors, or incomplete writes.

- Baseline Metric: Provides a reference point for future preprocessing or modifications.

What This Cell Does¶

- Opens

all_text.txtin read mode with UTF-8 encoding. - Reads the entire file content into a string variable (

raw_text). - Prints the total number of characters in the corpus.

How to Use the Output¶

- Expected Value: The character count should be large and nonzero. If it’s unexpectedly small, check your

texts/directory for missing or empty files. - Troubleshooting: If you encounter encoding errors, ensure all input files are UTF-8 encoded.

- Scaling: For very large corpora, consider reading the file in chunks or using file size in bytes (

os.stat('all_text.txt').st_size) instead.

Next Steps¶

- Use

raw_textas input for tokenization, vocabulary extraction, or further text analysis. - Optionally, perform additional validation (e.g., line count, previewing the start/end of the file) to further ensure data quality.

with open('all_text.txt', 'r', encoding='utf-8') as input_text:

raw_text = input_text.read()

print(f"Total number of characters in the raw text: {len(raw_text)}")

Total number of characters in the raw text: 330569

🧮 Tokenization and Vocabulary Construction¶

This cell performs the core tokenization step and builds a vocabulary from your assembled corpus. It splits the raw text into tokens, counts them, and constructs a mapping from each unique token to a unique integer index.

What This Cell Does¶

Tokenization:

- Uses a regular expression with

re.splitto break the text into tokens. - The pattern splits on common punctuation marks (

[,.!?():;_'""]), double dashes (--), and whitespace (\s). - This approach preserves punctuation as separate tokens and ensures that words, punctuation, and spaces are all represented.

- Uses a regular expression with

Token Count:

- Prints the total number of tokens generated from the corpus.

- Useful for understanding the granularity and size of your tokenized data.

Unique Tokens:

- Converts the token list to a set to extract all unique tokens.

- Sorts them for reproducibility and prints the total count.

- This gives you the vocabulary size, a key metric for language modeling and analysis.

Vocabulary Mapping:

- Creates a dictionary (

vocab) mapping each unique token to a unique integer index. - This mapping is essential for converting text into numerical form for machine learning models.

- Adds a special (

<UNK>) token to the end of the vocabulary to handle unknown tokens.

- Creates a dictionary (

Tips & Customization¶

- Regex Tuning: Adjust the regular expression to better fit your language or domain (e.g., handle contractions, special symbols, or multi-word expressions).

- Whitespace Handling: The current pattern includes whitespace as tokens. If you want to ignore or merge whitespace, modify the regex accordingly.

- Vocabulary Filtering: For large corpora, consider filtering out rare tokens or applying additional normalization (e.g., lowercasing, stemming).

Next Steps¶

- Use the

tokenized_textlist for further processing, such as sequence modeling or n-gram analysis. - The

vocabdictionary can be saved and reused for encoding new text or training models. - Analyze token frequency distributions or visualize the most common tokens for insights into your corpus.

tokenized_text = re.split(r'([,.!?():;_\'"]|--|\s)', raw_text)

print(f"Total number of tokens: {len(tokenized_text)}")

all_tokens = sorted(set(tokenized_text))

print(f"Total number of unique tokens: {len(all_tokens)}")

vocab = {token : index for index, token in enumerate(all_tokens)}

vocab.update({'<UNK>' : len(vocab)})

Total number of tokens: 143975 Total number of unique tokens: 7357

🧩 MiniTokenizer Class: Encoding and Decoding Text¶

This cell defines the MiniTokenizer class, which provides simple methods to convert text into sequences of token IDs (encoding) and to reconstruct text from token IDs (decoding) using the vocabulary built in previous steps.

Class Overview¶

Initialization (

__init__):- Takes a

vocabdictionary mapping tokens to unique integer indices. - Builds an

inverse_vocabdictionary for reverse lookup (index to token), enabling decoding.

- Takes a

Encoding (

encodemethod):- Splits input text into tokens using the same regular expression as before (

re.split(r'([,.!?():;_\'"]|--|\s)', text)). - Converts each token into its corresponding integer ID using the vocabulary.

- Adds the special token (

<UNK>) if an unkown token is encountered. - Returns a list of token IDs representing the input text.

- Splits input text into tokens using the same regular expression as before (

Decoding (

decodemethod):- Converts a list of token IDs back into tokens using

inverse_vocab. - Joins the tokens into a string, separated by spaces.

- Returns the reconstructed text.

- Converts a list of token IDs back into tokens using

Usage Example¶

tokenizer = MiniTokenizer(vocab)

ids = tokenizer.encode("Hello, world!")

print(ids) # Encoded token IDs

print(tokenizer.decode(ids)) # Decoded text (may include extra spaces)

class MiniTokenizer:

def __init__(self, vocab):

self.vocab = vocab

self.inverse_vocab = {index : token for token, index in vocab.items()}

def encode(self, text):

tokens = re.split(r'([,.!?():;_\'"]|--|\s)', text)

token_ids = [self.vocab[token] if token in self.vocab else self.vocab['<UNK>'] for token in tokens]

return token_ids

def decode(self, token_ids):

text = ''.join([self.inverse_vocab[token_id] for token_id in token_ids])

return text

tokenizer = MiniTokenizer(vocab)

ids = tokenizer.encode("Hello, world! This is a test of how well the tokenizer works.")

print(ids) # Encoded token IDs

print(tokenizer.decode(ids)) # Decoded text (may include extra spaces)

[7357, 10, 0, 3, 7182, 4, 0, 3, 1335, 3, 4267, 3, 1473, 3, 6587, 3, 4955, 3, 3929, 3, 7092, 3, 6602, 3, 7357, 3, 7181, 12, 0] <UNK>, world! This is a test of how well the <UNK> works.

Leave a Reply